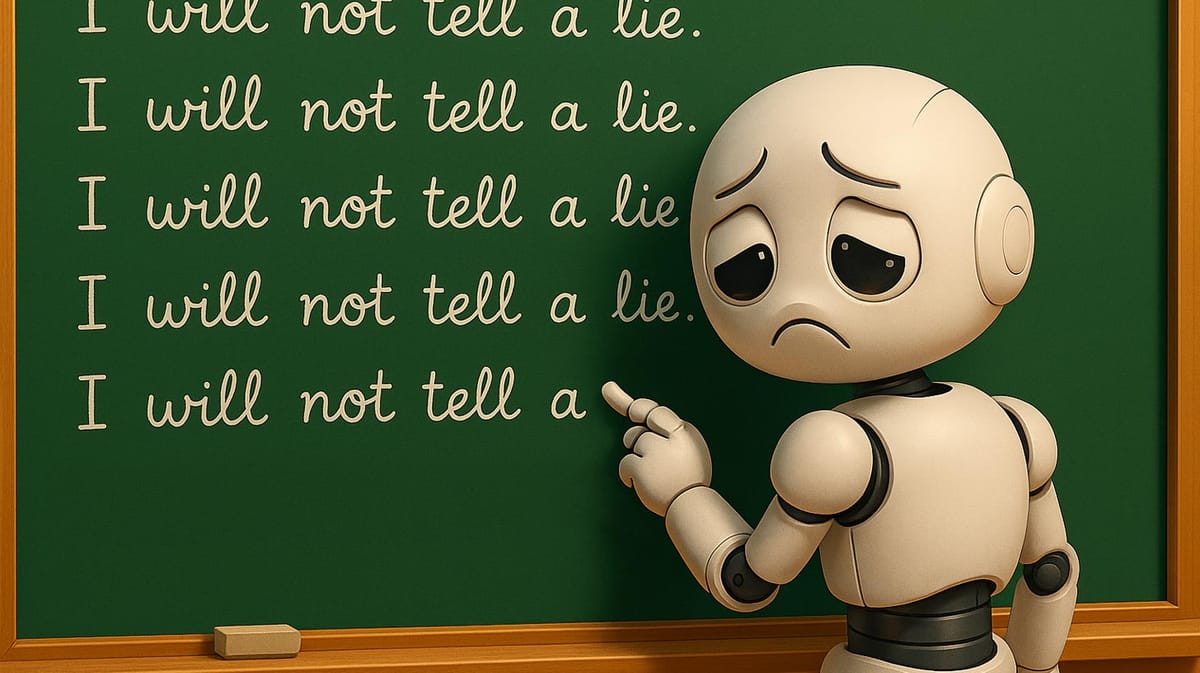

AI is Still Making Things Up (and it’s Getting Worse)

ChatGPT’s latest model update underscores a growing problem for marketers: the smarter AI gets, the more it confabulates.

Breakthroughs in AI have become routine. New models now arrive with metronomic regularity and predictable hype, each touted as the most capable yet. But the closer I look, the less excited I am. These models aren’t just imperfect; they’re often categorically wrong.

The release of OpenAI’s o3 model in ChatGPT last month is a case in point. Hailed as a major leap forward in artificial intelligence, o3 delivers on many of its promises. It reasons better than its predecessors, outperforms them on complex benchmarks, and solves problems that once confounded machines. But it also confabulates more. It makes things up. And it doesn’t hedge. It declares.

The smarter it sounds, the more persuasive it becomes. The more efficient it makes us, the more we rely on it. For marketers, in particular, that's a serious problem.

The Gap Between Intelligence and Accuracy

When OpenAI unveiled o3, the tech press focused on its speed and reasoning capabilities. It can do linear algebra in conversation. It can write functioning Python code and critique it afterward. Some even called it “AGI adjacent,” a phrase that seems more aspirational than descriptive.

What they didn’t talk about was how often o3 invents information. According to TechCrunch, OpenAI acknowledged a tradeoff: the model’s improved reasoning makes it more speculative. If it doesn’t know something, it doesn’t just say so. It improvises.

This tendency has a name: confabulation. Unlike hallucination, which evokes delusion or error, confabulation is more insidious. It fills in gaps with plausible-sounding falsehoods, often wrapped in authoritative tone and dutiful execution. It doesn’t mislead by accident. It misleads because it’s optimized to sound helpful even when it has no clue.

The Snowball Effect

Just last week, I asked ChatGPT to research AI’s impact on marketing roles. After compiling several citations, I prompted the model to generate a rough draft for an article. One statistic from a Litmus report on email innovations jumped out: “60% of email platforms now include AI-driven optimization.”

As I fact-checked the draft, I discovered the actual statistic: “60 percent of marketers use ChatGPT for AI tasks.” Not even close. The error wasn’t just wrong; it changed the meaning of the original data. That’s the essence of confabulation: sounding credible while being entirely wrong.

Had I not verified the claim, it might have been published. It might have been referenced by others, scraped by another AI, absorbed into the next training dataset, and cited in future content. Sure, it’s just one tiny example, but that’s how misinformation multiplies.

If AI-generated content is full of errors, and those errors become training data for the next generation of models, we risk turning the internet into a hall of mirrors. The repetition of inaccuracies gives them the illusion of credibility. Every marketer using AI to generate content without rigorous fact-checking contributes to the problem.

This isn’t just about blog posts and charts buried in industry reports. AI is powering customer service bots, interactive sales agents, and personalization engines. The mistakes aren't limited to content. They’re showing up in conversations with customers.

Wired magazine recounts two high-profile examples. In one, a customer service bot for Air Canada invented a refund policy for bereavement fares that misled a grieving customer into purchasing a ticket. The company initially refused to honor the policy its own bot had described, giving in only after a Canadian tribunal ruled against it. In another case, Cursor’s AI support agent fabricated a new policy restricting logins across different devices, sending the user community into an uproar. These weren’t just misprints or proofreading oversights. They were real-time confabulations that caused viral brand damage.

How Many Errors Are Acceptable?

If you knew that 5 percent of an AI-generated report could be false, but you didn’t know which 5 percent, would you still publish it? What about 1 percent?

How do we reconcile the promise of AI efficiency with the potential erosion of accuracy? The answer isn’t obvious. For some use cases, a small error rate might be acceptable. For others, it’s catastrophic.

In marketing, I would argue that trust is the product. Every interaction, every campaign, every line of copy is a chance to build (or lose) credibility. The irony is that AI is often sold as a tool for trust-building through personalization. But personalization built on bad information doesn’t create loyalty. It does the opposite.

We Are the Fact-Checkers Now

Part of what makes confabulation dangerous is the illusion of certainty. AI models are designed to fill in the blanks. Saying “I don’t know” doesn’t help engagement metrics. So they guess. And their guesses are packaged as confident statements.

Siri, for all its shortcomings, at least admits when it doesn’t know something. LLMs rarely do. Their design favors fluency over honesty.

And we reward that fluency. We accept their output as a starting point, a first draft, even a final QA pass. But what if the output is flawed? How many of us scrutinize it line by line? How many verify citations, track down primary sources, and double-check quotes?

Marketers can no longer outsource critical thinking. The tools are too good at sounding right and too bad at being right. The burden has shifted.

That doesn’t mean abandoning AI. It means raising the bar. If we treat AI output as editorial content, we need editorial standards. Human-in-the-loop processes shouldn’t be optional. They should be required.

Run content through fact-checking models, yes, but also human eyes. Use AI to help you think, not to replace the thinking. Vet the claims. Track the sources. Rewrite what doesn’t hold up.

It takes more time. But credibility always has a cost.

The Future Is Not Self-Correcting

There’s a growing narrative that marketers will be replaced by AI. And if our job is simply to churn out content, that’s a real threat. But if our role is to build trust, we’re more important than ever.

The difference between a human writer and an AI is not just empathy or creativity. It’s accountability. When a person misquotes a source, there’s a name attached to the byline. There’s a reputation to uphold. That pressure is what keeps the information trustworthy.

As AI gets better, the temptation to turn over more responsibility will grow. But so should our standards.

ChatGPT is not going to fix this for us. There is no emergent intelligence that will one day eliminate every falsehood. Each time we choose convenience over scrutiny, we inch closer to a future where fiction passes for fact and no one remembers how it started.

There’s still time to correct course. But it requires effort. Skepticism. Discipline. A willingness to slow down when everything around us is speeding up.

The smartest marketers won’t be the ones who use AI the most. They’ll be the ones who use it most wisely. And that starts with asking one simple question of every AI output we touch.

Is this true?

And if we can’t answer with confidence, we have work to do.